Installing Kubernetes

- Category: Computers

- Last Updated: Monday, 24 September 2018 12:56

- Published: Tuesday, 05 June 2018 12:35

- Written by sam

Today I tested building a Kubernetes cluster with the kubeadm tool.

Basic information

192.168.9.134 master Ubuntu 16.04.4 LTS

192.168.9.135 node Ubuntu 16.04.4 LTS

192.168.9.136 node Ubuntu 16.04.4 LTS

Setup

Install the OS and remember to update it.

Edit the network settings:

root@ubuntu134:~# vi /etc/network/interfaces

Add the hosts listed above to the hosts file:

root@ubuntu134:~# vi /etc/hosts
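As a rough sketch of what the /etc/hosts edit might look like, the helper below appends one entry per machine and skips names that are already present. The hostnames ubuntu134, ubuntu135 and ubuntu136 come from the shell prompts in this post; the function name add_host_entry is made up for illustration.

```shell
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless a line ending in NAME already exists.
# (Hypothetical helper, not part of any tool.)
add_host_entry() {
  if ! grep -Eq "[[:space:]]$3\$" "$1" 2>/dev/null; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}

# Usage on each machine (as root):
#   add_host_entry /etc/hosts 192.168.9.134 ubuntu134
#   add_host_entry /etc/hosts 192.168.9.135 ubuntu135
#   add_host_entry /etc/hosts 192.168.9.136 ubuntu136
```

Running it twice with the same name leaves the file unchanged, so it is safe to repeat on every machine.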

Add the Docker repository

root@ubuntu134:~# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -

root@ubuntu134:~# add-apt-repository \
"deb [arch=amd64] https://download.docker.com/linux/ubuntu \
$(lsb_release -cs) \
stable"

Add the Kubernetes repository

root@ubuntu134:~# curl -s "https://packages.cloud.google.com/apt/doc/apt-key.gpg" | apt-key add -

root@ubuntu134:~# echo "deb http://apt.kubernetes.io/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list

Disable swap (alternatively, Kubernetes can be configured manually to skip this check)

root@ubuntu134:~# swapoff -a && sysctl -w vm.swappiness=0

vm.swappiness = 0
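Note that swapoff -a only lasts until the next reboot. One common way to keep swap off, sketched here as an assumption rather than something this setup actually did, is to comment out the swap entries in /etc/fstab. The function name is hypothetical, and sed -i is used in the GNU form found on Ubuntu.

```shell
# comment_out_swap FILE
# Prefix every non-comment line that has "swap" as a whitespace-delimited
# field with '#', keeping a backup copy in FILE.bak.
# (Hypothetical helper for illustration.)
comment_out_swap() {
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Usage (as root):
#   comment_out_swap /etc/fstab
```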

Update the package index

root@ubuntu134:~# apt-get -y update

I did not pin a version and simply used the latest one.

If you need a specific version, you can

export a fixed version
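A minimal sketch of pinning, assuming the version is kept in an exported variable and passed to apt-get in its name=version form. The variable name KUBE_VERSION, the helper pinned_packages, and the version string 1.10.3-00 are all illustrative assumptions; check which versions the repository really offers first.

```shell
# Assumed example version string; list the real ones with
#   apt-cache madison kubeadm
export KUBE_VERSION="1.10.3-00"

# pinned_packages PKG...
# Print each package name as "name=$KUBE_VERSION" for apt-get.
# (Hypothetical helper for illustration.)
pinned_packages() {
  for pkg in "$@"; do
    printf '%s=%s ' "$pkg" "$KUBE_VERSION"
  done
  printf '\n'
}

# Usage on the master (as root):
#   apt-get -y install $(pinned_packages kubelet kubeadm kubectl) docker-ce
#   apt-mark hold kubelet kubeadm kubectl   # keep apt from upgrading them
```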

Master

root@ubuntu134:~# apt-get -y install kubelet kubeadm kubectl docker-ce

Node

root@ubuntu135:~# apt-get -y install kubelet kubeadm docker-ce

Master

root@ubuntu134:~# kubeadm token generate # optional; you can go straight to the next step

hkzkuc.yst1zi5cnhy2b3bx

root@ubuntu134:~# kubeadm init --pod-network-cidr 172.24.0.0/16

Write down this command from the output:

kubeadm join 192.168.9.134:6443 --token kxusrz.j1t4gwdkn1kkwp8g --discovery-token-ca-cert-hash sha256:f81ec68a9f49ec6f07ebdb76395d4d672dc5ffc17f6f6962f2d70303eac58cf9
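If the join line is lost, it can be rebuilt. Recent kubeadm releases can print a fresh one with kubeadm token create --print-join-command, and the hash part can be recomputed from the cluster CA certificate with openssl, as in this sketch. The function name is hypothetical, and the certificate path in the usage note is the kubeadm default, assumed here to hold an RSA key.

```shell
# ca_cert_hash CERT
# Print the value for --discovery-token-ca-cert-hash: the SHA-256 digest of
# the DER-encoded public key found in CERT, prefixed with "sha256:".
# (Hypothetical helper; assumes an RSA CA key.)
ca_cert_hash() {
  printf 'sha256:'
  openssl x509 -pubkey -in "$1" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# Usage on the master (as root):
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
#   kubeadm token list        # shows tokens that are still valid
```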

root@ubuntu134:~# mkdir -p $HOME/.kube

root@ubuntu134:~# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@ubuntu134:~# chown $(id -u):$(id -g) $HOME/.kube/config

root@ubuntu134:~# kubectl get node

NAME STATUS ROLES AGE VERSION

ubuntu134 NotReady master 3m v1.10.3

root@ubuntu134:~# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

etcd-ubuntu134 1/1 Running 0 4m 192.168.9.134 ubuntu134

kube-apiserver-ubuntu134 1/1 Running 0 4m 192.168.9.134 ubuntu134

kube-controller-manager-ubuntu134 1/1 Running 0 5m 192.168.9.134 ubuntu134

kube-dns-86f4d74b45-cqcn5 0/3 Pending 0 5m <none> <none>

kube-proxy-c4b88 1/1 Running 0 5m 192.168.9.134 ubuntu134

kube-scheduler-ubuntu134 1/1 Running 0 5m 192.168.9.134 ubuntu134

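The services are indeed up.

Next is networking.

Because my pod network is not the default 10.244.0.0/16, I first downloaded the manifest, edited it, and then applied it.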

###20180924###

Newer versions require the new RBAC authorization.

root@ubuntu134:~# wget https://raw.githubusercontent.com/coreos/flannel/v0.10.0/Documentation/kube-flannel.yml

root@ubuntu134:~# vi kube-flannel.yml

Edit the net-conf.json entry to use the custom pod network.
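The same edit can be scripted instead of done in vi. This sketch assumes the manifest carries a "Network" key inside net-conf.json (the stock flannel manifest ships it as 10.244.0.0/16) and rewrites it to the CIDR passed to kubeadm init. The function name is hypothetical, and sed -i is used in its GNU form.

```shell
# set_flannel_network FILE CIDR
# Rewrite the "Network" value inside net-conf.json to CIDR, in place.
# (Hypothetical helper for illustration.)
set_flannel_network() {
  sed -i "s#\"Network\": \"[0-9./]*\"#\"Network\": \"$2\"#" "$1"
}

# Usage:
#   set_flannel_network kube-flannel.yml 172.24.0.0/16
#   grep '"Network"' kube-flannel.yml   # confirm the change before applying
```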

root@ubuntu134:~# kubectl apply -f ./kube-flannel.yml

clusterrole.rbac.authorization.k8s.io "flannel" created

clusterrolebinding.rbac.authorization.k8s.io "flannel" created

serviceaccount "flannel" created

configmap "kube-flannel-cfg" created

daemonset.extensions "kube-flannel-ds" created

root@ubuntu134:~# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

etcd-ubuntu134 1/1 Running 0 7m 192.168.9.134 ubuntu134

kube-apiserver-ubuntu134 1/1 Running 0 7m 192.168.9.134 ubuntu134

kube-controller-manager-ubuntu134 1/1 Running 0 8m 192.168.9.134 ubuntu134

kube-dns-86f4d74b45-cqcn5 0/3 Pending 0 8m <none> <none>

kube-flannel-ds-h9wbd 1/1 Running 0 16s 192.168.9.134 ubuntu134

kube-proxy-c4b88 1/1 Running 0 8m 192.168.9.134 ubuntu134

kube-scheduler-ubuntu134 1/1 Running 0 7m 192.168.9.134 ubuntu134

A flannel pod has appeared.

Check whether a flannel interface has also appeared in the network configuration:

root@ubuntu134:~# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 00:0c:29:f7:9c:07 brd ff:ff:ff:ff:ff:ff

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:40:bf:02:72 brd ff:ff:ff:ff:ff:ff

4: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN mode DEFAULT group default

link/ether 2e:18:22:92:70:9f brd ff:ff:ff:ff:ff:ff

5: cni0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 0a:58:ac:18:00:01 brd ff:ff:ff:ff:ff:ff

6: veth92baa0ca@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue master cni0 state UP mode DEFAULT group default

link/ether 52:2a:57:f9:76:6c brd ff:ff:ff:ff:ff:ff link-netnsid 0

root@ubuntu134:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.9.8 0.0.0.0 UG 0 0 0 ens33

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.24.0.0 0.0.0.0 255.255.255.0 U 0 0 0 cni0

192.168.9.0 0.0.0.0 255.255.255.0 U 0 0 0 ens33

A cni route has been added.

root@ubuntu134:~# ip route

default via 192.168.9.8 dev ens33 onlink

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1 linkdown

172.24.0.0/24 dev cni0 proto kernel scope link src 172.24.0.1

192.168.9.0/24 dev ens33 proto kernel scope link src 192.168.9.134

Wait a moment.

root@ubuntu134:~# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

etcd-ubuntu134 1/1 Running 0 12m 192.168.9.134 ubuntu134

kube-apiserver-ubuntu134 1/1 Running 0 11m 192.168.9.134 ubuntu134

kube-controller-manager-ubuntu134 1/1 Running 0 12m 192.168.9.134 ubuntu134

kube-dns-86f4d74b45-cqcn5 3/3 Running 0 12m 172.24.0.2 ubuntu134

kube-flannel-ds-h9wbd 1/1 Running 0 4m 192.168.9.134 ubuntu134

kube-proxy-c4b88 1/1 Running 0 12m 192.168.9.134 ubuntu134

kube-scheduler-ubuntu134 1/1 Running 0 12m 192.168.9.134 ubuntu134

Everything is Running.
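The waiting step can be automated instead of re-running the command by hand. This sketch reads kubectl get pod output on standard input and succeeds only when every pod's STATUS column (the third field) says Running; both function names are hypothetical.

```shell
# all_pods_running
# Read `kubectl get pod` output on stdin; exit 0 only if there is at least
# one pod row and every row has STATUS (field 3) equal to "Running".
all_pods_running() {
  awk 'NR > 1 { n++; if ($3 != "Running") bad = 1 }
       END { exit (bad || n == 0) ? 1 : 0 }'
}

# wait_for_kube_system
# Poll every 5 seconds until all kube-system pods are Running.
# Loops forever if kubectl keeps failing, so interrupt it if needed.
wait_for_kube_system() {
  until kubectl get pod -n kube-system | all_pods_running; do
    sleep 5
  done
}
```

Calling wait_for_kube_system on the master blocks until the listing looks like the one above.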

Join the nodes

root@ubuntu135:~# kubeadm join 192.168.9.134:6443 --token kxusrz.j1t4gwdkn1kkwp8g --discovery-token-ca-cert-hash sha256:f81ec68a9f49ec6f07ebdb76395d4d672dc5ffc17f6f6962f2d70303eac58cf9

root@ubuntu136:~# kubeadm join 192.168.9.134:6443 --token kxusrz.j1t4gwdkn1kkwp8g --discovery-token-ca-cert-hash sha256:f81ec68a9f49ec6f07ebdb76395d4d672dc5ffc17f6f6962f2d70303eac58cf9

root@ubuntu134:~# kubectl get node

NAME STATUS ROLES AGE VERSION

ubuntu134 Ready master 14m v1.10.3

ubuntu135 NotReady <none> 26s v1.10.3

ubuntu136 NotReady <none> 25s v1.10.3

The installation is now complete.

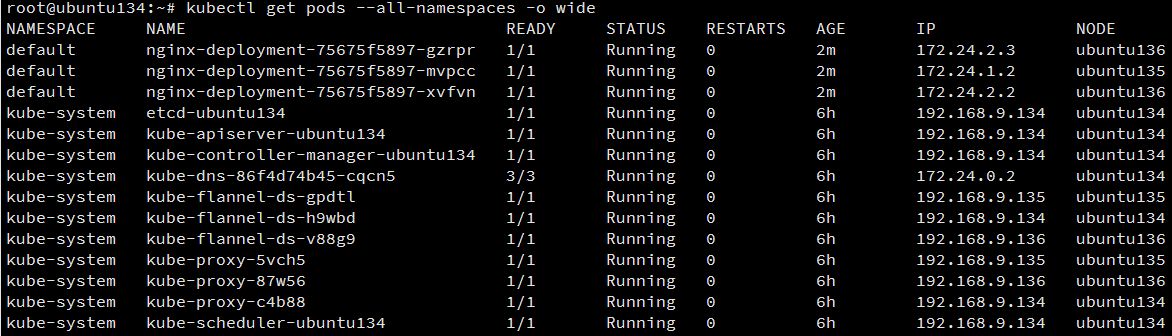

root@ubuntu134:~# kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE

etcd-ubuntu134 1/1 Running 0 15m 192.168.9.134 ubuntu134

kube-apiserver-ubuntu134 1/1 Running 0 15m 192.168.9.134 ubuntu134

kube-controller-manager-ubuntu134 1/1 Running 0 15m 192.168.9.134 ubuntu134

kube-dns-86f4d74b45-cqcn5 3/3 Running 0 15m 172.24.0.2 ubuntu134

kube-flannel-ds-gpdtl 1/1 Running 0 1m 192.168.9.135 ubuntu135

kube-flannel-ds-h9wbd 1/1 Running 0 7m 192.168.9.134 ubuntu134

kube-flannel-ds-v88g9 1/1 Running 0 1m 192.168.9.136 ubuntu136

kube-proxy-5vch5 1/1 Running 0 1m 192.168.9.135 ubuntu135

kube-proxy-87w56 1/1 Running 0 1m 192.168.9.136 ubuntu136

kube-proxy-c4b88 1/1 Running 0 15m 192.168.9.134 ubuntu134

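kube-scheduler-ubuntu134 1/1 Running 0 15m 192.168.9.134 ubuntu134

Everything is there, and all of it is in the Running state.

A few troubleshooting notes

If a pod stays in ContainerCreating:

kube-dns-2924299975-prlgf 0/4 ContainerCreating

There are a few possible causes. One is insufficient resources. Another is that you are not using the default pod subnet but forgot to specify the one you want when running init.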

root@ubuntu134:~# kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}'

172.24.0.0/24 172.24.1.0/24 172.24.2.0/24

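Check whether the correct custom CIDRs are printed. In the failing case, running ip link on the node shows that the related devices and routes were never created.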
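The CIDR check can be done mechanically rather than by eye. This sketch, in plain POSIX shell arithmetic, tests whether each node's podCIDR falls inside the cluster-wide pod network; all three function names are hypothetical, and only IPv4 without leading zeros in the octets is handled.

```shell
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1
  echo "$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))"
)

# cidr_contains OUTER INNER -> exit 0 if INNER lies entirely inside OUTER.
cidr_contains() (
  outer_ip=${1%/*}; outer_len=${1#*/}
  inner_ip=${2%/*}; inner_len=${2#*/}
  [ "$inner_len" -ge "$outer_len" ] || exit 1
  mask=$(( (0xFFFFFFFF << (32 - outer_len)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$outer_ip") & mask )) -eq $(( $(ip_to_int "$inner_ip") & mask )) ]
)

# check_pod_cidrs CLUSTER_CIDR NODE_CIDR...
# Print one line per node CIDR; exit non-zero if any is outside the cluster.
check_pod_cidrs() (
  cluster=$1; shift; rc=0
  for cidr in "$@"; do
    if cidr_contains "$cluster" "$cidr"; then
      echo "ok   $cidr"
    else
      echo "BAD  $cidr (outside $cluster)"; rc=1
    fi
  done
  exit "$rc"
)

# Usage on the master:
#   check_pod_cidrs 172.24.0.0/16 \
#     $(kubectl get nodes -o jsonpath='{.items[*].spec.podCIDR}')
```

A BAD line usually means the cluster was initialized with a different --pod-network-cidr than the one written into the flannel manifest.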

Check the logs of the failing pod in kube-system to see whether everything was set up normally.

kubectl -n kube-system logs -c kube-flannel kube-flannel-ds-gpdtl...

I0604 10:22:50.842686 1 iptables.go:115] Some iptables rules are missing; deleting and recreating rules

I0604 10:22:50.842741 1 iptables.go:137] Deleting iptables rule: -s 172.24.0.0/16 -d 172.24.0.0/16 -j RETURN

I0604 10:22:50.844253 1 iptables.go:115] Some iptables rules are missing; deleting and recreating rules

I0604 10:22:50.844595 1 iptables.go:137] Deleting iptables rule: -s 172.24.0.0/16 -j ACCEPT

I0604 10:22:50.847023 1 iptables.go:137] Deleting iptables rule: -d 172.24.0.0/16 -j ACCEPT

I0604 10:22:50.848480 1 iptables.go:125] Adding iptables rule: -s 172.24.0.0/16 -j ACCEPT

I0604 10:22:50.851506 1 iptables.go:125] Adding iptables rule: -d 172.24.0.0/16 -j ACCEPT

I0604 10:22:51.846651 1 iptables.go:137] Deleting iptables rule: -s 172.24.0.0/16 ! -d 224.0.0.0/4 -j MASQUERADE

I0604 10:22:51.943997 1 iptables.go:137] Deleting iptables rule: ! -s 172.24.0.0/16 -d 172.24.1.0/24 -j RETURN

I0604 10:22:51.945753 1 iptables.go:137] Deleting iptables rule: ! -s 172.24.0.0/16 -d 172.24.0.0/16 -j MASQUERADE

I0604 10:22:51.947817 1 iptables.go:125] Adding iptables rule: -s 172.24.0.0/16 -d 172.24.0.0/16 -j RETURN

I0604 10:22:52.045275 1 iptables.go:125] Adding iptables rule: -s 172.24.0.0/16 ! -d 224.0.0.0/4 -j MASQUERADE

I0604 10:22:52.142481 1 iptables.go:125] Adding iptables rule: ! -s 172.24.0.0/16 -d 172.24.1.0/24 -j RETURN

I0604 10:22:52.146515 1 iptables.go:125] Adding iptables rule: ! -s 172.24.0.0/16 -d 172.24.0.0/16 -j MASQUERADE

To start a node over, run this command (if that still does not work, you can add --force):

root@ubuntu134:~# kubectl delete node node2

Then go to the node and clean up the related settings:

root@ubuntu134:~# kubeadm reset

root@ubuntu134:~# ifconfig cni0 down

root@ubuntu134:~# ip link delete cni0

root@ubuntu134:~# ifconfig flannel.1 down

root@ubuntu134:~# ip link delete flannel.1

root@ubuntu134:~# rm -rf /var/lib/cni/

After this the node is like new again.

Next up is creating pods and services (in the next post...)